Lecture 12

Data Preparation and Management

September 25, 2024

Technologies Used to Manage and Process Big Data

Technologies Used to Manage and Process Big Data

- Definition of Big Data

- Data sets so large and complex that traditional data management tools are inadequate.

- The Need for Advanced Technologies

- Emerging technologies are essential to manage, process, and analyze big data effectively.

- Overview of Topics

- Data Warehouses

- Extract, Transform, Load (ETL) Process

- Data Marts

- Data Lakes

- Hadoop

Technologies Used to Manage and Process Big Data

Challenges with Traditional Data Management

- Limitations

- Traditional software and hardware can’t handle the volume, velocity, and variety of big data.

- Impact

- Inability to store massive data volumes efficiently.

- Difficulty in processing and analyzing data in a timely manner.

- Solution

- Adoption of new technologies specifically designed for big data management.

Data Warehouses, Data Marts, and Data Lakes

Data Warehouses

- Definition

- A large database that holds business information from many sources across the enterprise.

- Purpose

- Supports decision-making processes by providing a comprehensive view of the organization’s data.

Data Warehouses, Data Marts, and Data Lakes

Data Warehouses Characteristics

| Characteristic | Description |

|---|---|

| Large | Holds billions of records and petabytes of data |

| Multiple Sources | Data comes from many internal and external sources via the ETL process |

| Historical | Typically contains data spanning 5 years or more |

| Cross-Organizational Access and Analysis | Data accessed and analyzed by users across the organization to support multiple business processes and decision-making |

| Supports Various Analyses and Reporting | Enables drill-down analysis, metric development, trend identification |

Data Warehouses, Data Marts, and Data Lakes

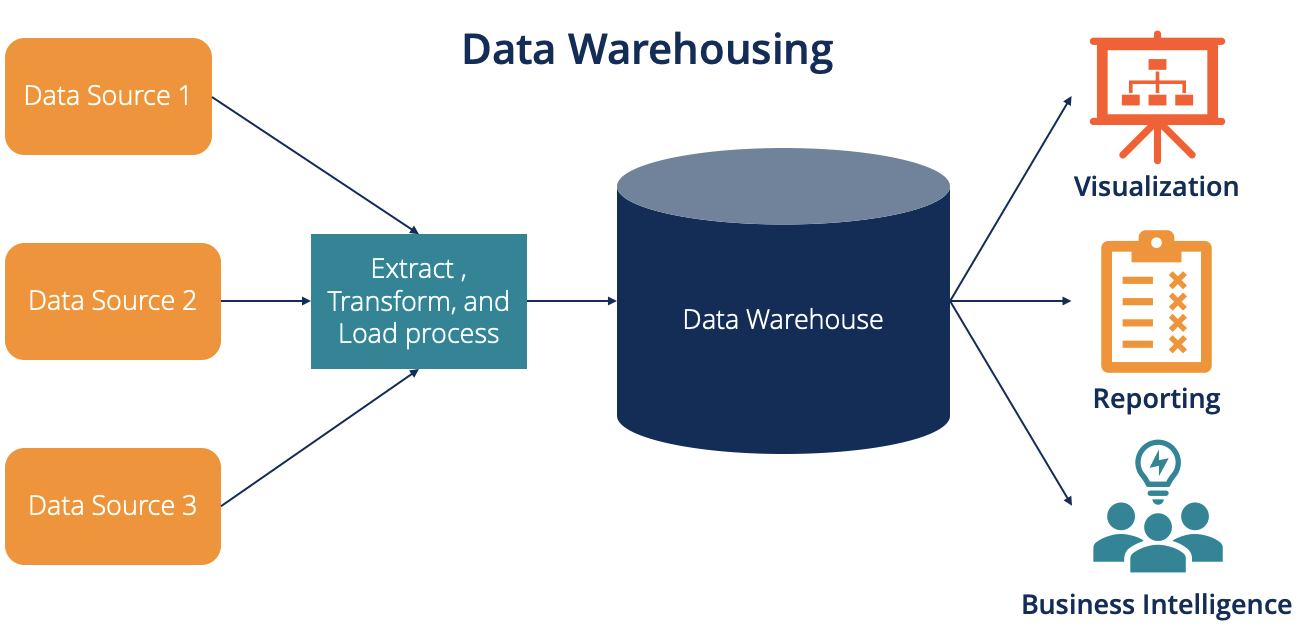

Data Warehouse Architecture

- Data Sources

- Internal Systems: Online transaction processing systems, customer relationship management, enterprise resource planning.

- External Systems: Social media, government databases, etc.

- ETL Process

- Extract, Transform, Load (to be discussed in detail).

- Data Storage

- Centralized repository optimized for query and analysis.

- Data Access

- Used by various departments for reporting, analysis, and decision-making.

Data Warehouses, Data Marts, and Data Lakes

Examples of Data Warehouse Usage

- Walmart

- Early adopter; used data warehouse to gain a competitive advantage in supply chain management.

- Held transaction data from over 11,000 stores and 25,000 suppliers.

- First commercial data warehouse to reach 1 terabyte in 1992.

- Collects data over 2.5 petabytes per hour in 2024.

Data Warehouses, Data Marts, and Data Lakes

Examples of Data Warehouse Usage

- WHOOP Wearable Device

- Collects massive biometric data from athletes.

- Data warehouse stores sensor data collected 100 times per second.

- Provides insights on strain, recovery, and sleep to optimize performance.

Data Warehouses, Data Marts, and Data Lakes

Examples of Data Warehouse Usage

- American Airlines

- Flight attendants access customer data to enhance in-flight service.

- Ability to resolve issues by offering free miles or travel vouchers based on customer history.

Data Warehouses, Data Marts, and Data Lakes

Data Quality in Data Warehouses

- Challenges

- Data Inconsistencies: Duplicate or missing data leading to incorrect analyses.

- Dirty Data: Inaccurate, incomplete, or outdated information.

- Misleading statistics: “Garbage in, garbage out”

- Importance

- Ensuring high data quality is critical to avoid misleading conclusions.

- Solution

- Implement robust ETL processes to cleanse and standardize data.

Extract, Transform, Load (ETL) Process

Overview of ETL Process

- Purpose

- Integral process for populating data warehouses with clean, consistent data.

- Stages

- Extract

- Collecting data from multiple sources.

- Transform

- Cleaning and converting data into a suitable format.

- Load

- Inserting transformed data into the data warehouse.

- Extract

Extract, Transform, Load (ETL) Process

Extract Stage

- Data Sources

- Various internal and external systems.

- Process

- Data Extraction: Pulling data from different systems.

- Data Validation: Rejecting data that doesn’t meet quality standards (e.g., missing values, invalid formats).

- Challenges

- Dealing with heterogeneous data formats and structures.

Extract, Transform, Load (ETL) Process

Transform Stage

- Purpose

- Convert extracted data into a standardized format suitable for analysis.

- Processes

- Data Cleaning: Correcting errors and inconsistencies.

- Data Integration: Combining data from different sources.

- Data Aggregation: Summarizing data to reduce processing time.

- Example Transformation:

- Converting customer address information into a sales district or census tract.

Extract, Transform, Load (ETL) Process

Load Stage

- Process

- Data Loading: Inserting transformed data into the data warehouse.

- Indexing: Creating indices to improve query performance.

- Constraint Checking: Ensuring data integrity according to database schema.

- Challenges

- The loading process for large data warehouses can take days.

- Outcome

- The data warehouse is updated with high-quality, consistent data ready for analysis.

Data Marts

Definition of Data Marts

- Subset of Data Warehouse

- Focused on a specific business area or department.

- Purpose

- Provides relevant data to a specific group without the complexity of the entire data warehouse.

- Advantages

- Cost-Effective: Less expensive to implement and maintain.

- Faster Access: Optimized for departmental needs.

- Simplified Analysis: Easier to query and analyze due to reduced data volume.

Data Marts

Data Mart Use Cases

- Departmental Focus

- Finance: Financial reporting and analysis.

- Marketing: Customer segmentation and campaign analysis.

- Inventory: Stock levels and supply chain management.

- Small to Medium-Sized Businesses

- An affordable alternative to a full-scale data warehouse.

Data Marts

Data Marts vs. Data Warehouses

| Feature | Data Mart | Data Warehouse |

|---|---|---|

| Scope | Specific department or business area | Entire enterprise |

| Data Volume | Smaller | Larger |

| Complexity | Less complex | More complex |

| Implementation Time | Shorter | Longer |

| Cost | Lower | Higher |

Data Lakes

Definition of Data Lakes

- “Store Everything” Approach

- Stores all data in its raw, unaltered form.

- Purpose

- Provides a centralized repository for all data, accommodating future analytical needs.

- Characteristics

- Data Variety: Includes structured, semi-structured, and unstructured data.

- Flexibility: Data is available for any type of analysis at any time.

Data Lakes

Data Lakes vs. Data Warehouses

| Feature | Data Lake | Data Warehouse |

|---|---|---|

| Data Processing | Schema-on-read (processed when accessed) | Schema-on-write (processed before storage) |

| Data State | Raw, unprocessed | Cleaned, transformed |

| Data Types | All data types | Primarily structured |

| Flexibility | High | Moderate |

Data Lakes

Case Study: Bechtel Corporation

- About Bechtel

- Global engineering, construction, and project management company.

- Implementation

- Built a 5-petabyte data lake consolidating years of project data.

Data Lakes

Case Study: Bechtel Corporation

- Benefits

- Historical Insights: Access to data from hundreds of projects worldwide.

- Improved Forecasting: Better predictions of project outcomes.

- Cost Reduction: Identifying inefficiencies to cut costs.

- Competitive Advantage: Enhanced ability to win new contracts through data-driven insights.

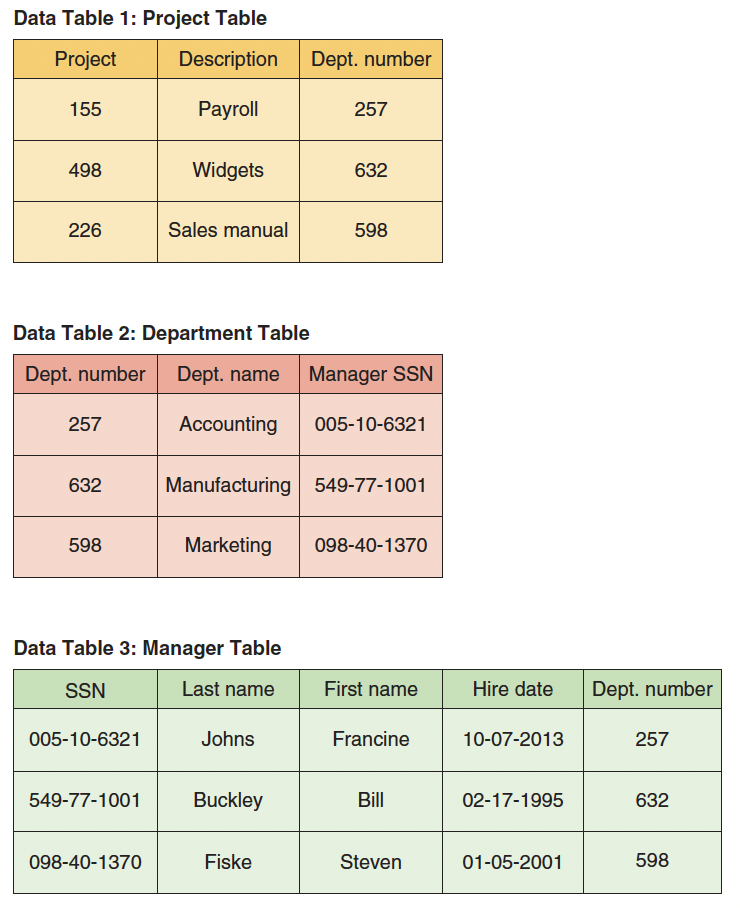

Relational Databases

- Sometimes, one data set is scattered across multiple files.

- The size of the files can be huge.

- The data collection process can be scattered across time and space.

- E.g.,

data.framefor county-level data anddata.framefor geographic information, such as longitude and latitude.

- Sometimes we want to combine two or more

data.framebased on common data values in thosedata.frame.- This task is known in the database world as performing a “join.”

Relational Databases

- The relational database model is a simple but highly useful way to organize data into collections of two-dimensional tables called relations.

Relational Databases

Characteristics

- Data is organized into collections of two-dimensional tables called relations.

- Each row in the table represents an entity and each column represents an attribute of that entity.

- Each row in a table is uniquely identified by a key.

- The key variable enables relationships between the tables to be defined.

- User queries are used to perform operations on the database like adding, changing, or deleting data and selecting, and joining existing data in existing tables.