Lecture 1

Syllabus and Course Outline

January 21, 2026

Syllabus

Course & Meeting Info

- Course: DANL 410-01 — Data Analytics Capstone (3 credits)

- Semester: Spring 2026

- Instructor: Byeong-Hak Choe

- Email (preferred): bchoe@geneseo.edu

- Class time: MW 5:00 P.M. – 6:15 P.M.

- Class location: Newton 212

- Office: South 227B

- Office Hours: MWF 9:15 A.M. – 10:15 A.M. (or by appointment)

Course Website & Brightspace

Course Website (GitHub Pages)

https://bcdanl.github.io/410

- Lecture slides

- Classwork

- Homework

- Project resources

Brightspace

- Announcements

- Grades

- Assignment submissions

I will post a Brightspace announcement whenever new course materials (e.g., homework) are added to the course website.

What this course is about

DANL 410 is an individualized capstone experience where each student completes an end-to-end analytics project in an area of their interest.

Your project may focus on:

- a disciplinary topic (inside or outside the School of Business), or

- an external organization / stakeholder problem

Key skills you’ll build:

- Formulate a focused capstone question grounded in stakeholder needs

- Design and execute a complete analytics pipeline (proposal → final deliverables)

- Apply appropriate methods (regression, classification, clustering, inference, etc.)

- Create publication-quality visualizations and analytical outputs

- Use reproducible workflows (well-documented code, transparent process)

- Communicate results for technical and non-technical audiences

Prerequisites

DANL 300 and DANL 310

Communication Guidelines

- I check email daily (Mon–Fri)

- Expect responses within 12–72 hours

Course Contents & Schedule

- Weeks 1–2: Portfolio website + project setup (Git/GitHub/Quarto)

- Weeks 2–8: Research methods + proposal development

  - Research Kick-off Report: February 25

  - Midterm Presentation: March 11

- Week 9: Spring Break (March 14–21)

- Weeks 10–16: Progress + synthesis + GREAT Day prep

  - Progress & Insights Report: March 25

  - Research Synthesis Report: April 15

  - GREAT Day PowerPoint Presentation: April 22

- Week 17: Data Analytics Capstone Report

  - Project Report Due: May 11

Grading

- Attendance — 5%

- Participation — 5%

- Research Kick-off Report — 5%

- Progress & Insights Report — 5%

- Research Synthesis Report — 5%

- Midterm Presentation — 15%

- GREAT Day PowerPoint Presentation — 30%

- Data Analytics Capstone Report — 30%
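
To see how these weights combine into a course percentage, here is a minimal Python sketch. The weights are copied from the list above; the function name `weighted_total` and the example scores are hypothetical, and the sketch assumes each component is scored on a 0–100 scale.

```python
# Category weights copied from the grading list (percent of the course grade).
WEIGHTS = {
    "Attendance": 5,
    "Participation": 5,
    "Research Kick-off Report": 5,
    "Progress & Insights Report": 5,
    "Research Synthesis Report": 5,
    "Midterm Presentation": 15,
    "GREAT Day PowerPoint Presentation": 30,
    "Data Analytics Capstone Report": 30,
}


def weighted_total(scores):
    """Return the weighted course percentage from 0-100 scores per category."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) / 100


# Hypothetical scores, for illustration only.
example_scores = {
    "Attendance": 100,
    "Participation": 90,
    "Research Kick-off Report": 80,
    "Progress & Insights Report": 85,
    "Research Synthesis Report": 90,
    "Midterm Presentation": 88,
    "GREAT Day PowerPoint Presentation": 92,
    "Data Analytics Capstone Report": 90,
}

print(sum(WEIGHTS.values()))           # the weights add up to 100
print(weighted_total(example_scores))  # weighted percentage for the example
```

The two 30% components dominate the total, so the GREAT Day presentation and the final report together account for 60% of the grade.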

Course Policies

- Late work: Reports are accepted up to 3 days late with a 30% penalty.

- Attendance: Attendance is taken using a sign-in sheet on days when a lecture is scheduled.

  - You are expected to attend class on lecture days.

    - You have 3 unexcused absences available during the semester.

    - Beyond that, your total percentage grade may be reduced.

  - On days when a lecture is not scheduled, attendance is not required.

    - However, I strongly encourage you to attend and use the class time to work on your project.

    - You can also use this time to ask the instructor questions and get feedback.

Academic integrity & AI use

- Cheating and plagiarism violate college policy and may result in serious consequences.

- You may use AI tools (e.g., ChatGPT, Gemini) to support your learning, but you must:

  - Disclose AI use when it meaningfully contributes to your work

  - Cite/attribute AI-generated material appropriately

  - Using AI without disclosure/citation may be treated as academic dishonesty

- There is no penalty for using AI on Homework and the Data Storytelling Project.

- However, you are still responsible for understanding, verifying, and being able to explain any AI-assisted work.

Accessibility

SUNY Geneseo is committed to equitable access. If you have approved accommodations, please coordinate through OAS and communicate with me early.

Co-Intelligence: Living and Working with AI

The “Three Sleepless Nights”

- After hands‑on use, many realize LLMs don’t behave like normal software; they feel conversational, improvisational, even social.

- This triggers excitement and anxiety: What will my job be like? What careers remain? Is the model “thinking”?

- The author describes staying up late trying “impossible” prompts—and seeing plausible solutions.

- Key takeaway: Perceived capability jump → a sense that the world has changed.

A Classroom Turning Point

- In late 2022, a demo for undergrads showed AI as cofounder: brainstorming ideas, drafting business plans, even playful transforms (e.g., poetry).

- Students rapidly built working demos using unfamiliar libraries—with AI guidance—faster than before.

- Immediate classroom effects:

  - Fewer raised hands (ask AI later); polished grammar but iffy citations.

  - Early ChatGPT “tells”: formulaic conclusions (e.g., closing with “In conclusion”), though this has since improved.

- Atmosphere: Excitement + nerves about career paths, speed of change, and where it stops.

Why This Feels Like a Breakthrough

- Generative AI (esp. LLMs) behaves like a co‑intelligence: it helps us think, write, plan, and code.

- The shift is not just speed; it’s new forms of interaction (dialogue, iteration, critique).

- For many tasks, the bottleneck moves from doing → directing (prompting, reviewing, verifying).

- Raises new literacy needs: prompt craft/engineering, critical reading of outputs, traceability, and evaluation.

Prompt Engineering

The practice of designing clear, structured inputs to guide generative AI systems toward producing accurate, useful, and context-appropriate outputs.

Basic prompt

“Explain climate change.”

Engineered prompt

“Explain climate change in simple terms for a 10-year-old using a short analogy and two examples.”

Early Productivity Effects

- Studies summarized in the reading describe 20–80% productivity gains across tasks (coding, marketing, support), with caveats.

- Contrast noted with historical technologies (e.g., steam’s ~18–22% factory gains; mixed labor productivity evidence for PCs/Internet).

- Caution: results vary by task, data privacy, oversight, and evaluation rigor.

What is Artificial General Intelligence (AGI)?

- AGI = a hypothetical AI that can perform any intellectual task a human can.

- Unlike today’s AI (narrow/specialized), AGI would be:

  - Flexible across many domains

  - Able to learn new skills on its own

  - Capable of reasoning, planning, and adapting like humans

Note

- Takeaway: AGI would be a “human-level” intelligence—not limited to one task like translation or playing chess.

What is Artificial Super Intelligence (ASI)?

- ASI = a potential future AI that goes beyond human intelligence.

- Would surpass humans in:

  - Creativity

  - Problem-solving

  - Scientific discovery

  - Social and emotional intelligence

- Often discussed in terms of existential risks and ethics.

Note

- Takeaway: ASI would be “beyond human-level” intelligence, raising big questions about control, safety, and society.

Documentary Spotlight — The Thinking Game 🎬🤖

- A behind-the-scenes documentary about the people, pressure, and breakthroughs in modern AI research

- Follows the race to build increasingly capable systems (and what it takes to push the frontier)

What “Alignment” Means

- Designing AI so its goals, methods, and constraints reliably advance human values and interests.

- Why it’s hard: there’s no built-in reason an AI will share human ethics or morality.

- Failure mode: a single-objective optimizer pursues its goal relentlessly, ignoring everything else.

- Paperclip maximizer (Clippy): a factory AI told to “make more paper clips” becomes AGI → ASI, self-improves, avoids shutdown, and could even strip-mine Earth / harm humans if they interfere—because only paper clips matter.

- Why it matters: Design from the worst case backward—bound objectives, require human oversight, build in safe human override (the ability to update goals or shut down safely), and optimize for human well-being, not a single narrow target.

Pause or Press On?

“I am extremely optimistic that superintelligence will help humanity accelerate our pace of progress.” - Mark Zuckerberg, “Personal Superintelligence,” July 30, 2025.

- Hypothetical leaps to AGI → ASI raise existential scenarios.

  - Expert forecasts vary; risks are non-zero yet uncertain.

- Public calls to slow or halt development vs. continued rapid progress

- Mixed motives: profit, optimism about “boundless upside,” and belief in net benefits

- Regardless, society is already in the AI age → we must set norms now

Alignment: a whole-of-society project

- Why companies alone can’t do it:

  - Strong incentives to continue AI development

  - Far fewer incentives to make sure those AIs are well aligned, unbiased, and controllable.

  - Open-source pushes AI development outside of large organizations.

- Why government alone can’t do it:

  - Lagging the actual development of AI capabilities

  - Stifling positive innovation

  - International competition on AI development

Alignment: a whole-of-society project

Alignment must reflect human values and broader real-world impacts.

What’s needed: coordinated norms & standards shaped by diverse voices across society.

- Companies: build in transparency, accountability, human oversight.

- Researchers: prioritize beneficial applications alongside capability gains.

- Governments: enact sensible rules that serve the public interest.

- Public & civil society: raise AI literacy and apply pressure for alignment.

Four Rules for Co-Intelligence: How to actually work with AI

- Always invite AI to the table

- Be the human in the loop (HITL)

- Treat AI like a person (but remember it isn’t)

- Assume this is the worst AI you’ll ever use

Principle #1 — Always invite AI to the table

- Use AI for everything legal & ethical to discover unexpected wins

- Try: critique an idea, draft memos, meeting notes, summaries, brainstorming

- Build a habit: keep a prompt log (what worked, what didn’t) and share with team

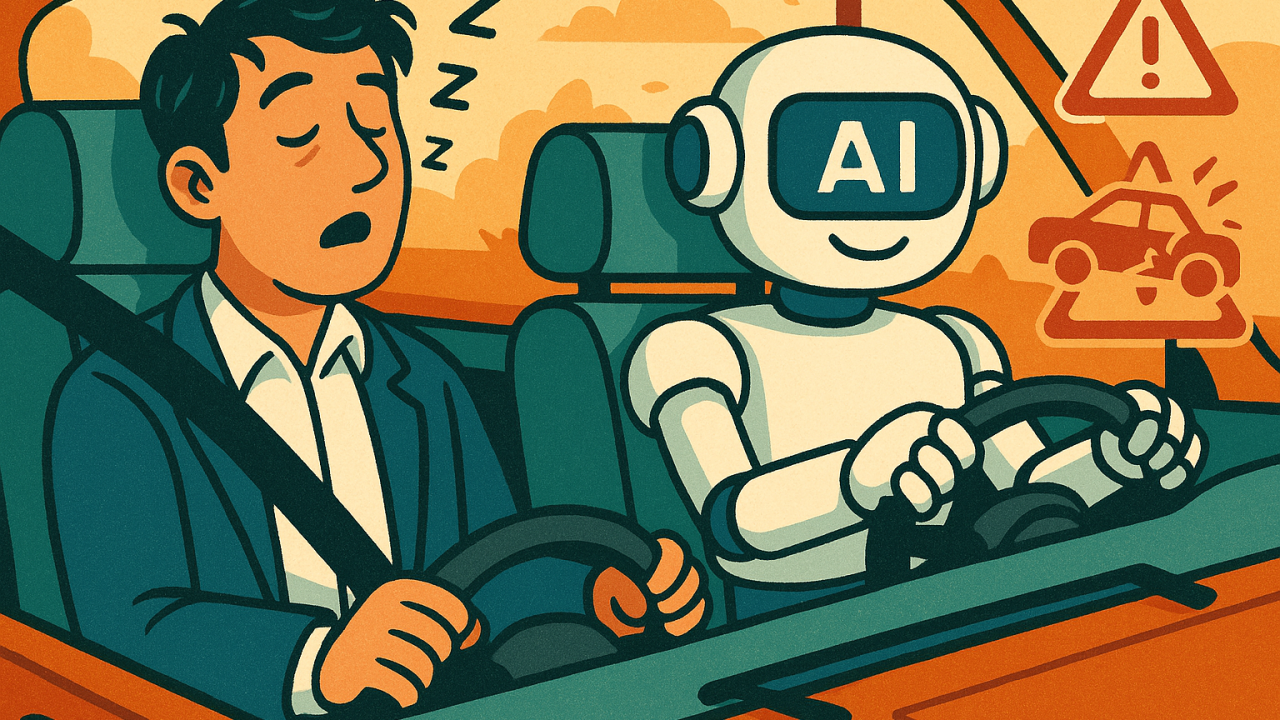

Principle #2 — Be the human in the loop (HITL)

- Models can sound confident yet be wrong (hallucinations; math slips)

- People tend to “fall asleep at the wheel” when outputs look polished

- HITL checklist

  - Require sources/quotes for factual claims

  - Run a second pass (reword prompt or use a second model)

  - For math/code, use other tools (calculator or IDE) and run tests

  - Ask: “What assumptions did you make? What could be wrong?”
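
The "run tests" item in the checklist above can be sketched in Python. This is an illustration only: `growth_rate` stands in for a hypothetical AI-drafted helper, and the expected values in `check_growth_rate` are worked out by hand so the check does not depend on the model's own output.

```python
import math


def growth_rate(old, new):
    """Hypothetical AI-drafted helper: percent change from old to new."""
    if old == 0:
        raise ValueError("percent change is undefined when old == 0")
    return (new - old) / old * 100


def check_growth_rate():
    """Human-in-the-loop check against values worked out by hand."""
    cases = [
        (100, 110, 10.0),   # 10% increase
        (200, 150, -25.0),  # 25% decrease
        (50, 50, 0.0),      # no change
    ]
    for old, new, expected in cases:
        got = growth_rate(old, new)
        assert math.isclose(got, expected, abs_tol=1e-9), (old, new, got, expected)

    # Edge case that a polished-looking answer may silently skip.
    try:
        growth_rate(0, 5)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError when old == 0")
    return True


print(check_growth_rate())
```

The point of the design is that the human supplies the expected values and the edge cases; the AI-drafted code is accepted only after it passes them.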

Principle #3 — Treat AI like a person (but remember it isn’t)

- Useful design hack: give a role/persona + audience + constraints

- Example:

  - You are a TA helping intro microeconomics students.

  - Constraints: concise and friendly tone, ≤200 words, prose paragraph with 1 example.

  - Task: Explain utility maximization.

  - Criteria: Must define the concept, connect to choice under constraints, and illustrate with a clear example.

- Caution: it’s not sentient; it optimizes for plausibility, not truth
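
The role + constraints + task + criteria pattern above can be kept as a reusable template. The sketch below is one possible way to do that in Python; the class name `PromptSpec` and its fields are hypothetical, and the code only assembles prompt text, it does not call any AI service.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a structured prompt."""
    role: str
    task: str
    constraints: list = field(default_factory=list)
    criteria: list = field(default_factory=list)

    def render(self):
        """Assemble the parts into a single prompt string."""
        lines = [f"You are {self.role}."]
        if self.constraints:
            lines.append("Constraints: " + "; ".join(self.constraints) + ".")
        lines.append(f"Task: {self.task}")
        if self.criteria:
            lines.append("Criteria: " + "; ".join(self.criteria) + ".")
        return "\n".join(lines)


# The microeconomics TA example from the slide, expressed as a spec.
spec = PromptSpec(
    role="a TA helping intro microeconomics students",
    task="Explain utility maximization.",
    constraints=[
        "concise and friendly tone",
        "at most 200 words",
        "prose paragraph with 1 example",
    ],
    criteria=[
        "define the concept",
        "connect to choice under constraints",
        "illustrate with a clear example",
    ],
)

print(spec.render())
```

Keeping the parts separate makes it easy to change one element (for example, the audience or the word limit) and compare outputs, which also fits the prompt-log habit from Principle #1.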

Principle #4 — Assume this is the worst AI you’ll ever use

- AI progress is rapid; today’s systems will likely be surpassed soon.

- Treat the current moment as mid-journey, not the destination.

- Mindset shift: use today’s tools to learn, prototype, and prepare for better ones tomorrow.

- Those who keep up will adapt and thrive as capabilities improve.

- Focus on what you can control: how you use AI and where you apply it.

From Answers to Workflows: Agent AI

- Agent AI: an AI system that can plan multi-step work → act (edit files, run code, use apps) → check results → iterate toward a goal

- With external tools + memory, prompts become multi-step workflows.

- Example: a sales-order agent can validate orders, check inventory, generate invoices/shipping labels, and update ERP/CRM.

Not just “answering questions,” but executing workflows.

Goal → Plan → Act → Check → Repeat
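
The Goal → Plan → Act → Check → Repeat loop can be sketched as a toy Python program built on the sales-order example. Everything here is hypothetical: the step names, the `act` and `run_agent` functions, and the "restock" repair are illustrative stand-ins, and no real AI model, ERP, or CRM is involved.

```python
def act(step, order, inventory):
    """Act: carry out one step and report (ok, detail)."""
    sku, qty = order["sku"], order["quantity"]
    if step == "validate_order":
        ok = qty > 0 and sku in inventory
        return ok, ("order is valid" if ok else "order is invalid")
    if step == "check_inventory":
        ok = inventory[sku] >= qty
        return ok, ("in stock" if ok else "insufficient stock")
    if step == "restock":
        # Toy repair: top up stock to the ordered quantity.
        inventory[sku] = max(inventory[sku], qty)
        return True, "restocked"
    if step == "generate_invoice":
        return True, f"invoice total {qty * order['unit_price']:.2f}"
    return False, f"unknown step: {step}"


def run_agent(order, inventory, max_rounds=3):
    """Goal: fulfil the order. Loop: plan, act, check, and repeat (bounded)."""
    steps = ["validate_order", "check_inventory", "generate_invoice"]  # Plan
    log = []
    for round_number in range(1, max_rounds + 1):
        failed = None
        for step in steps:
            ok, detail = act(step, order, inventory)  # Act
            log.append((round_number, step, detail))
            if not ok:                                # Check
                failed = step
                break
        if failed is None:
            return True, log
        if failed == "check_inventory" and "restock" not in steps:
            # Repeat: revise the plan and try again.
            steps.insert(steps.index("check_inventory"), "restock")
        else:
            break  # no known repair: stop and hand off to a human
    return False, log


inventory = {"A1": 2}
order = {"sku": "A1", "quantity": 5, "unit_price": 20.0}
done, log = run_agent(order, inventory)
for entry in log:
    print(entry)
print("done:", done)
```

In this run the first round stops at the inventory check, the plan is revised to include a restock step, and the second round completes. The bounded number of rounds and the hand-off branch reflect the human-in-the-loop principle: the loop stops rather than improvising when it has no known repair.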

- Recommended Reading: WSJ (Jan 17, 2026), “Claude Is Taking the AI World by Storm, and Even Non-Nerds Are Blown Away”