import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.preprocessing import scale # zero mean & one s.d.

from sklearn.linear_model import LassoCV, lasso_path

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_errorHomework 4 - Part 1: Lasso Linear Regerssion - Model 1

Beer Markets with Big Demographic Design

beer = pd.read_csv("https://bcdanl.github.io/data/beer_markets_xbeer_xdemog.zip")

#beer = pd.read_csv("https://bcdanl.github.io/data/beer_markets_xbeer_brand_xdemog.zip")

#beer = pd.read_csv("https://bcdanl.github.io/data/beer_markets_xbeer_brand_promo_xdemog.zip")X = beer.drop('ylogprice', axis = 1)

y = beer['ylogprice']X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

y_train = y_train.values

y_test = y_test.values# LassoCV with a range of alpha values

lasso_cv = LassoCV(n_alphas = 100,

alphas = None, # alphas=None automatically generate 100 candidate alpha values

cv = 5,

random_state=42,

max_iter=100000)lasso_cv.fit(X_train.values, y_train)

#lasso_cv.fit(X_train.values, y_train.ravel())

print("LassoCV - Best alpha:", lasso_cv.alpha_)LassoCV - Best alpha: 8.170612772733373e-05# Create a DataFrame including the intercept and the coefficients:

coef_lasso_beer = pd.DataFrame({

'predictor': list(X_train.columns),

'coefficient': list(lasso_cv.coef_),

'exp_coefficient': np.exp( list(lasso_cv.coef_) )

})

# Evaluate

y_pred_lasso = lasso_cv.predict(X_test)

mse_lasso = mean_squared_error(y_test, y_pred_lasso)

print("LassoCV - MSE:", mse_lasso)LassoCV - MSE: 0.027828386344520374coef_lasso_beer_n0 = coef_lasso_beer[coef_lasso_beer['coefficient'] != 0]X_train.shape[1]2641coef_lasso_beer_n0.shape[0]394coef_lasso_beer_n0| predictor | coefficient | exp_coefficient | |

|---|---|---|---|

| 0 | logquantity | -0.142898 | 0.866842 |

| 1 | container_CAN | -0.054903 | 0.946577 |

| 2 | brandBUSCH_LIGHT | -0.258170 | 0.772464 |

| 4 | brandMILLER_LITE | -0.015062 | 0.985051 |

| 5 | brandNATURAL_LIGHT | -0.315142 | 0.729685 |

| ... | ... | ... | ... |

| 2557 | marketBOSTON:npeople5plus | -0.006056 | 0.993963 |

| 2563 | marketCOLUMBUS:npeople5plus | -0.032305 | 0.968211 |

| 2564 | marketDALLAS:npeople5plus | 0.045550 | 1.046603 |

| 2568 | marketEXURBAN_NJ:npeople5plus | 0.127266 | 1.135720 |

| 2628 | marketSACRAMENTO:npeople5plus | 0.016272 | 1.016405 |

394 rows × 3 columns

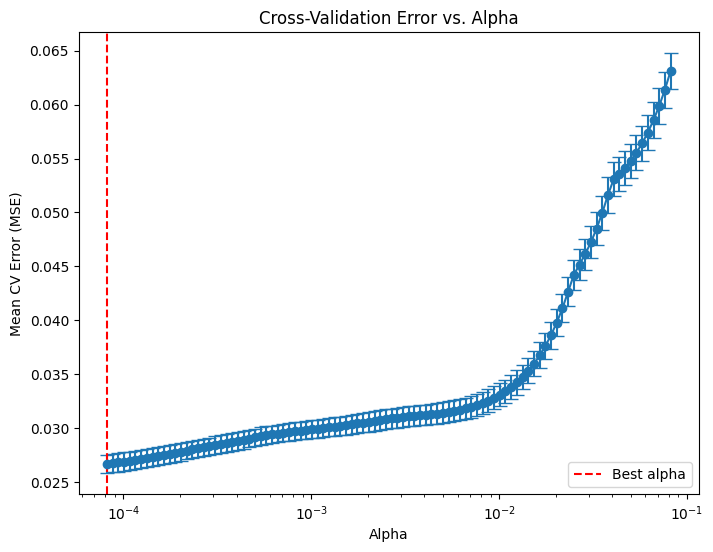

# Compute the mean and standard deviation of the CV errors for each alpha.

mean_cv_errors = np.mean(lasso_cv.mse_path_, axis=1)

std_cv_errors = np.std(lasso_cv.mse_path_, axis=1)

plt.figure(figsize=(8, 6))

plt.errorbar(lasso_cv.alphas_, mean_cv_errors, yerr=std_cv_errors, marker='o', linestyle='-', capsize=5)

plt.xscale('log')

plt.xlabel('Alpha')

plt.ylabel('Mean CV Error (MSE)')

plt.title('Cross-Validation Error vs. Alpha')

#plt.gca().invert_xaxis() # Optionally invert the x-axis so lower alphas (less regularization) appear to the right.

plt.axvline(x=lasso_cv.alpha_, color='red', linestyle='--', label='Best alpha')

plt.legend()

plt.show()

# Compute the coefficient path over the alpha grid that LassoCV used

alphas, coefs, _ = lasso_path(X_train, y_train,

alphas=lasso_cv.alphas_,

max_iter=100000)

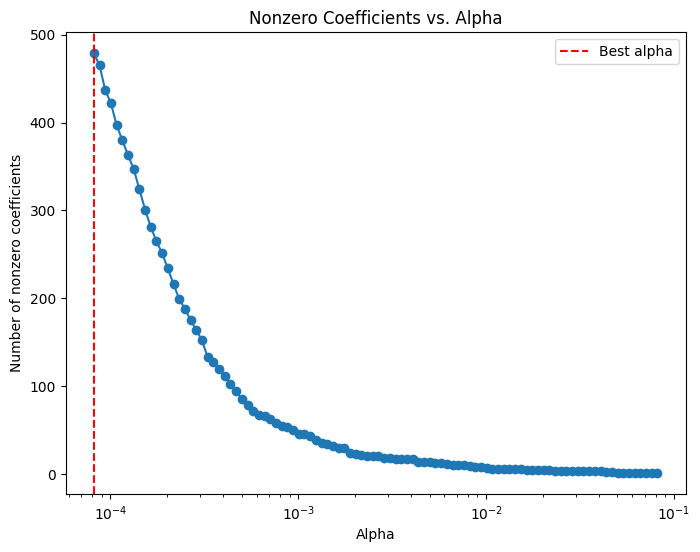

# Count nonzero coefficients for each alpha (coefs shape: (n_features, n_alphas))

nonzero_counts = np.sum(coefs != 0, axis=0)

# Plot the number of nonzero coefficients versus alpha

plt.figure(figsize=(8,6))

plt.plot(alphas, nonzero_counts, marker='o', linestyle='-')

plt.xscale('log')

plt.xlabel('Alpha')

plt.ylabel('Number of nonzero coefficients')

plt.title('Nonzero Coefficients vs. Alpha')

#plt.gca().invert_xaxis() # Lower alphas (less regularization) on the right

plt.axvline(x=lasso_cv.alpha_, color='red', linestyle='--', label='Best alpha')

plt.legend()

plt.show()

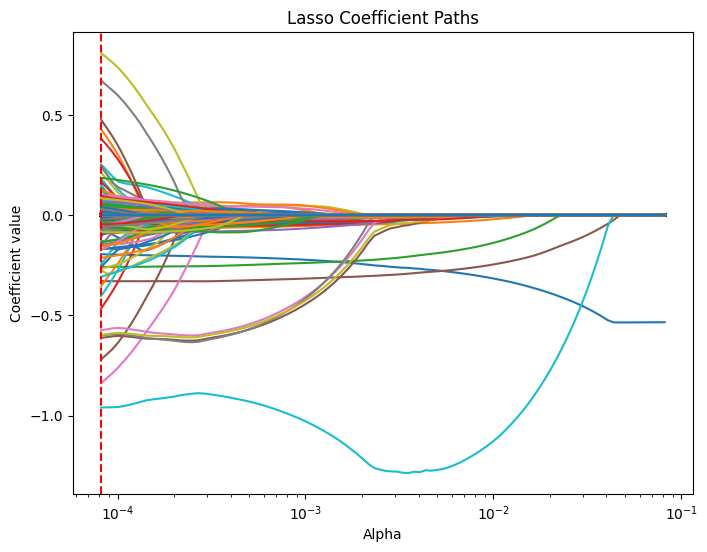

# Compute the lasso path. Note: we use np.log(y_train) because that's what you used in LassoCV.

alphas, coefs, _ = lasso_path(X_train, y_train,

alphas=lasso_cv.alphas_,

max_iter=100000)

plt.figure(figsize=(8, 6))

# Iterate over each predictor and plot its coefficient path.

for i, col in enumerate(X_train.columns):

plt.plot(alphas, coefs[i, :], label=col)

plt.xscale('log')

plt.xlabel('Alpha')

plt.ylabel('Coefficient value')

plt.title('Lasso Coefficient Paths')

#plt.gca().invert_xaxis() # Lower alphas (weaker regularization) to the right.

#plt.legend(bbox_to_anchor=(1.05, 1), loc='upper left')

plt.axvline(x=lasso_cv.alpha_, color='red', linestyle='--', label='Best alpha')

#plt.legend()

plt.show()