Lecture 5

Distributed Computing Framework; Apache Hadoop and Spark; PySpark

February 5, 2025

Distributed Computing Framework

Distributed Computing Framework (DCF)

- Massive Jigsaw Puzzle:

- Solving alone takes forever.

- Invite friends to work on different sections simultaneously.

- DCF Role:

- Acts like a team manager.

- Splits large problems into manageable tasks.

- Coordinates parallel work across multiple computers (nodes).

How DCF Works: The Process

- Task Distribution:

- Breaks problems into smaller tasks.

- Assigns tasks to different nodes in the network.

- Parallel Processing:

- Multiple nodes work at the same time.

- Like an assembly line where different parts are built simultaneously.

- Communication & Aggregation:

- Ensures nodes communicate effectively.

- Gathers individual results into a final solution.
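
The three steps above can be sketched on a single machine. This is a minimal illustration, not a real DCF: worker threads stand in for nodes, and the helper names (`split_into_tasks`, `process_task`, `run_distributed`) are hypothetical, chosen only for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tasks(data, n_tasks):
    """Task distribution: cut the data into roughly equal chunks."""
    size = max(1, -(-len(data) // n_tasks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def process_task(chunk):
    """Work done by one 'node': here, sum the squares in its chunk."""
    return sum(x * x for x in chunk)


def run_distributed(data, n_nodes=4):
    tasks = split_into_tasks(data, n_nodes)
    # Parallel processing: each worker thread stands in for a node.
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partial_results = list(pool.map(process_task, tasks))
    # Aggregation: combine the partial results into the final answer.
    return sum(partial_results)


total = run_distributed(list(range(1, 101)))
print(total)  # 338350, the sum of squares from 1 to 100
```

A real framework adds what this sketch leaves out: moving data between machines, scheduling, and recovery from failures.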

Real-World Analogies

- Factory Manager:

- Each worker builds a part of a toy (arms, legs, wheels).

- The manager (DCF) ensures all parts come together to form a complete toy.

- Race Organizer:

- Different computers have varying speeds and capabilities.

- Tougher tasks are assigned to faster or more capable nodes.

Robustness and Scalability

- Fault Tolerance:

- Handles failures gracefully.

- If a node fails, tasks are reassigned or retried (like a substitute worker in a factory).

- Resource Allocation:

- Distributes tasks based on node capability.

- Optimizes efficiency across the network.

- Scalability:

- Easily adds more computers to the network.

- More helpers = faster puzzle solving.
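
The "reassigned or retried" idea under Fault Tolerance can be sketched as follows. This is a simplified illustration under stated assumptions: nodes are modeled as plain Python functions, a failure is modeled as a `RuntimeError`, and `run_with_retries`, `failing_node`, and `healthy_node` are hypothetical names, not part of any framework.

```python
def run_with_retries(task, nodes, max_attempts=3):
    """Try the task on successive nodes until one of them succeeds."""
    errors = []
    for attempt in range(max_attempts):
        node = nodes[attempt % len(nodes)]  # reassign to the next node
        try:
            return node(task)
        except RuntimeError as exc:
            errors.append(str(exc))  # remember the failure, then retry
    raise RuntimeError(f"task failed after {max_attempts} attempts: {errors}")


def failing_node(task):
    # Simulates a node that has crashed.
    raise RuntimeError("node A is down")


def healthy_node(task):
    # Simulates a working node: doubles its input.
    return task * 2


result = run_with_retries(21, [failing_node, healthy_node])
print(result)  # 42: the first attempt fails, the substitute node succeeds
```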

Distributed Computing Framework (DCF)

- DCF is the Conductor of the Orchestra:

- Every musician (node) plays their part.

- The conductor (DCF) synchronizes the performance to create a harmonious final result.

- Key Benefits:

- Faster problem-solving through parallelism.

- Efficient management of tasks and resources.

- Resilient to failures and scalable for growing problems.

Real-World Examples

- Hadoop

- Apache Spark

Apache Hadoop

Hadoop

Introduction to Hadoop

- Definition

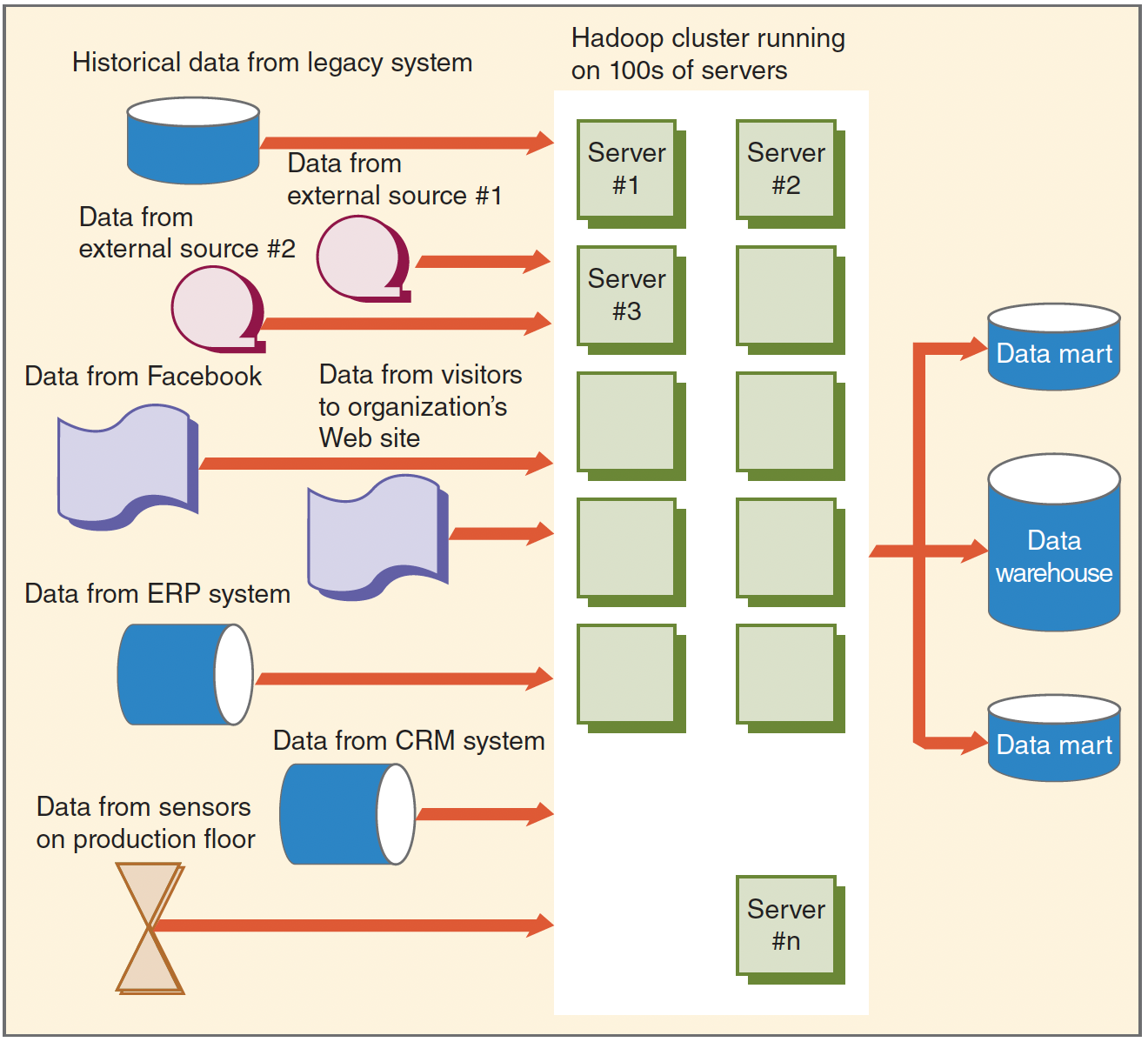

- An open-source software framework for storing and processing large data sets.

- Components

- Hadoop Distributed File System (HDFS): Distributed data storage.

- MapReduce: Data processing model.

- Purpose

- Enables distributed processing of large data sets across clusters of computers.

Hadoop Architecture - HDFS

- HDFS

- Divides data into blocks and distributes them across different servers for processing.

- Provides a highly redundant computing environment by replicating each block on multiple servers (three copies by default).

- Allows the application to keep running even if individual servers fail.

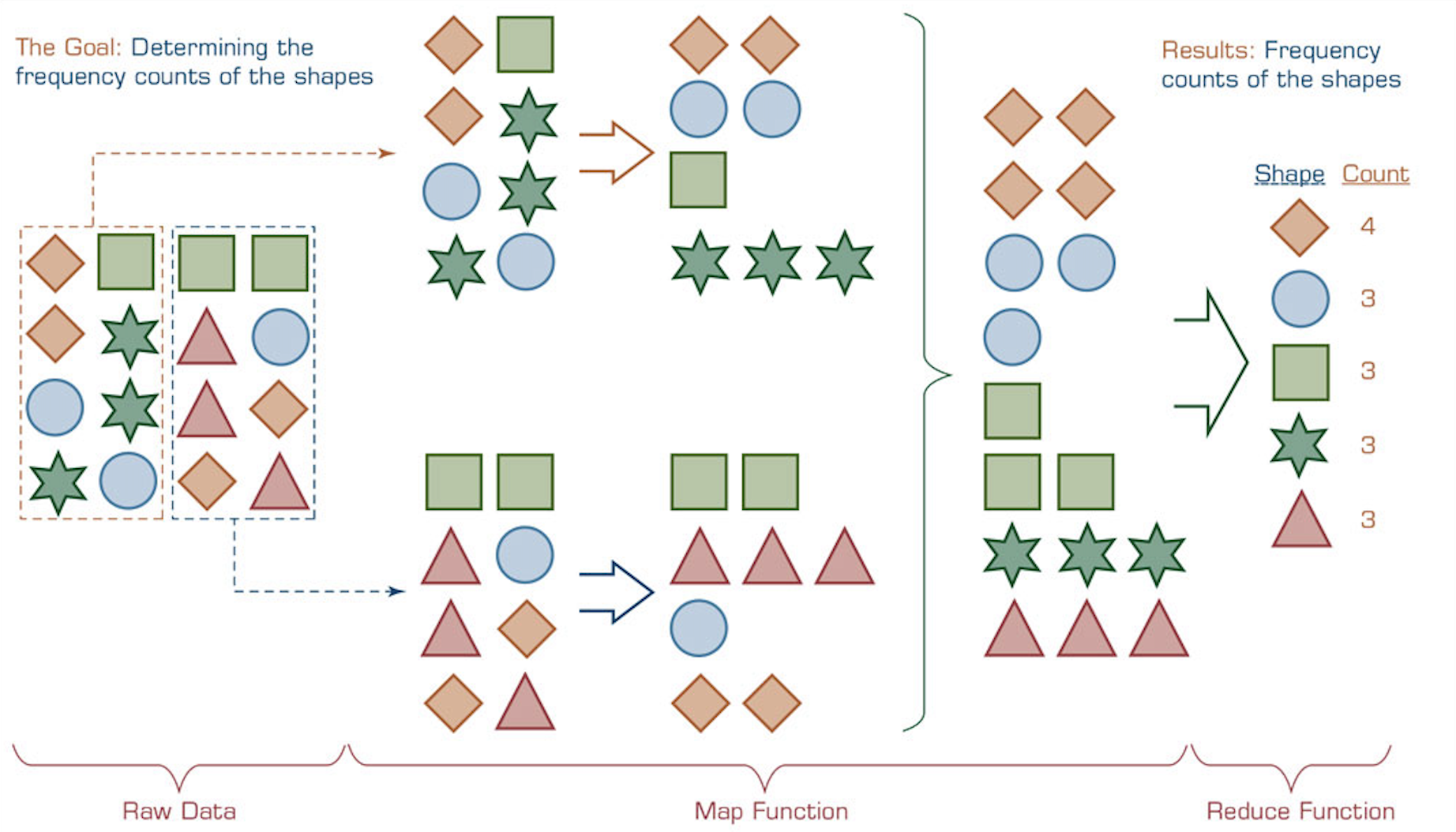

Hadoop Architecture - MapReduce

- MapReduce: Distributes the processing of big data files across a large cluster of machines.

- High performance is achieved by breaking the processing into small units of work that can be run in parallel across nodes in the cluster.

- Map Phase: Filters and sorts data.

- e.g., Grouping customer orders by product ID, so that each group corresponds to one product ID.

- Reduce Phase: Summarizes and aggregates results.

- e.g., Counting the number of orders within each group, thereby determining the frequency of each product ID.
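
The product-ID example can be written as a small single-machine sketch of the MapReduce pattern. It uses plain Python rather than Hadoop's own API, and the sample `orders` data and the function names (`map_phase`, `shuffle`, `reduce_phase`) are made up for illustration. The explicit `shuffle` step is the grouping that Hadoop performs between the two phases.

```python
from collections import defaultdict

# Hypothetical sample data: one record per customer order.
orders = [
    {"order_id": 1, "product_id": "P1"},
    {"order_id": 2, "product_id": "P2"},
    {"order_id": 3, "product_id": "P1"},
    {"order_id": 4, "product_id": "P3"},
    {"order_id": 5, "product_id": "P1"},
    {"order_id": 6, "product_id": "P2"},
]


def map_phase(order):
    """Emit a (key, value) pair: the product ID and a count of 1."""
    return (order["product_id"], 1)


def shuffle(pairs):
    """Group all values that share the same key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Aggregate one group: the number of orders for this product."""
    return (key, sum(values))


mapped = [map_phase(order) for order in orders]
grouped = shuffle(mapped)
counts = dict(reduce_phase(key, values) for key, values in grouped.items())
print(counts)  # {'P1': 3, 'P2': 2, 'P3': 1}
```

In a cluster, the map calls run on the servers that hold each block of orders, and each reduce call can run on a different server.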

How Hadoop Works

- Data Distribution

- Large data sets are split into smaller blocks.

- Data Storage

- Blocks are stored across multiple servers in the cluster.

- Processing with MapReduce

- Map Tasks: Executed on servers where data resides, minimizing data movement.

- Reduce Tasks: Combine results from map tasks to produce final output.

- Fault Tolerance

- Data replication ensures processing continues even if servers fail.

Extending Hadoop for Real-Time Processing

- Limitation of Hadoop

- Hadoop was originally designed for batch processing.

- Batch Processing: Data or tasks are collected over a period of time and then processed all at once, typically at scheduled times or during periods of low activity.

- Results come after the entire dataset is analyzed.

- Real-Time Processing Limitation:

- Hadoop cannot natively process real-time streaming data (e.g., stock prices flowing into stock exchanges, live sensor data).

- Extending Hadoop’s Capabilities

- Both Apache Storm and Apache Spark can run on top of Hadoop clusters, utilizing HDFS for storage.

Apache Spark

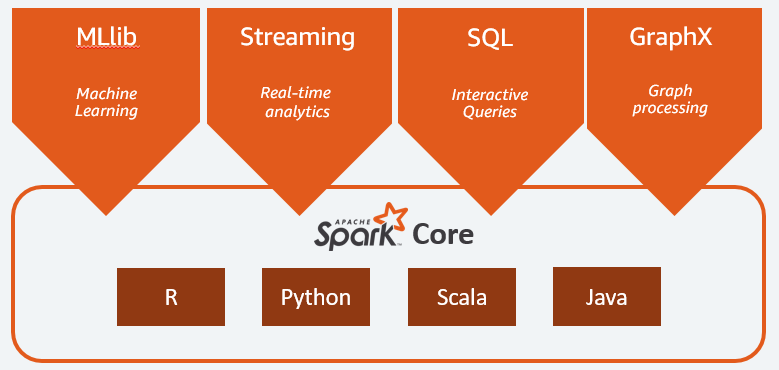

Spark

- Apache Spark: a distributed processing system for big data workloads; a unified computing engine that runs on computer clusters.

- It includes a set of libraries for parallel data analysis, machine learning, graph analysis, and live data streaming.

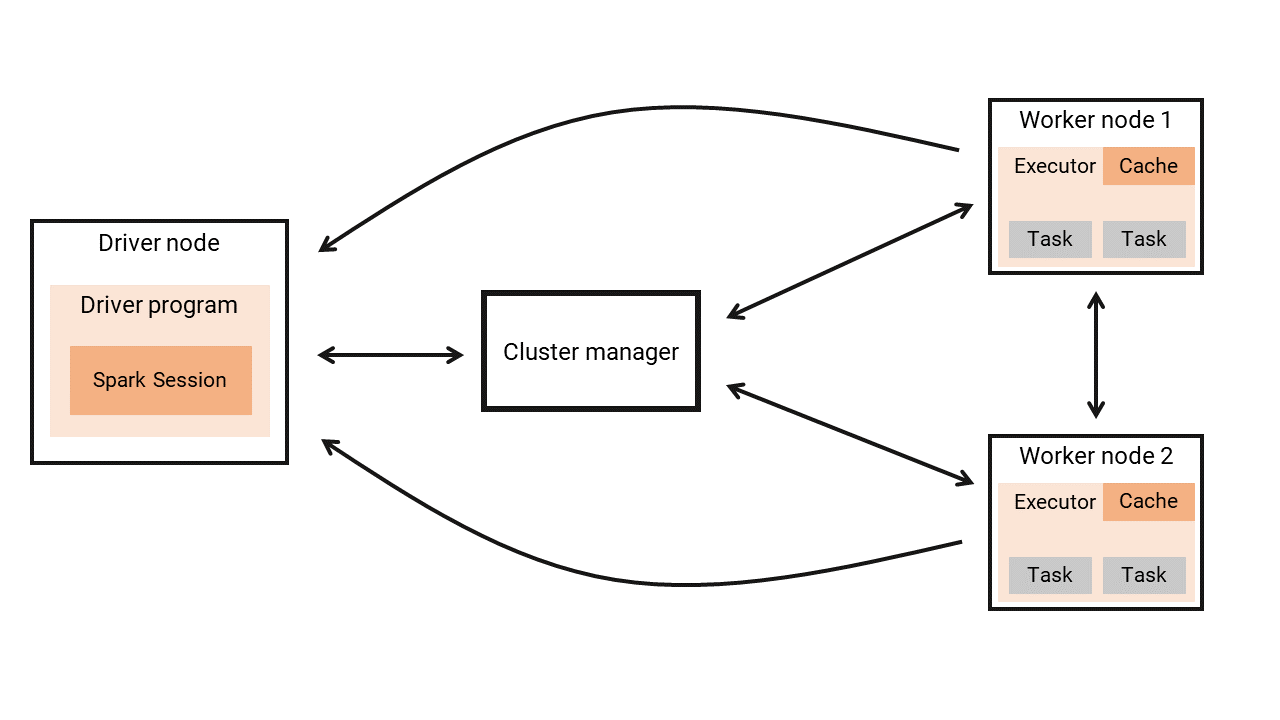

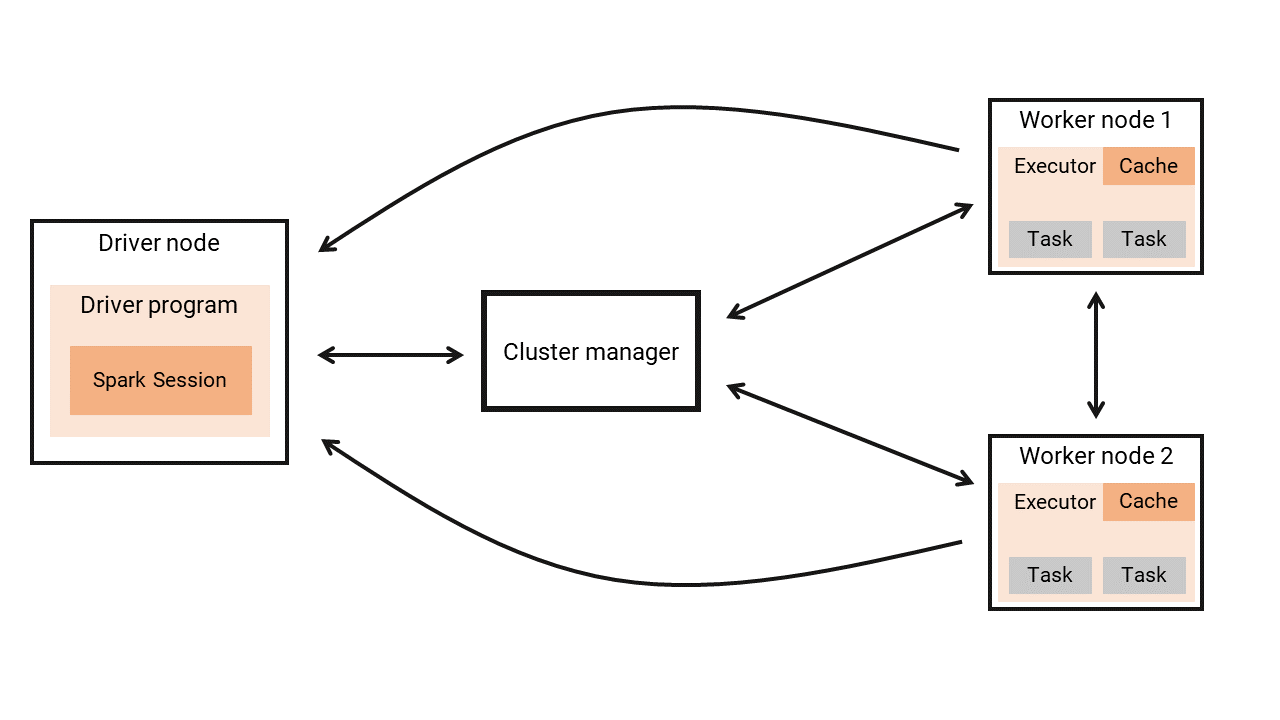

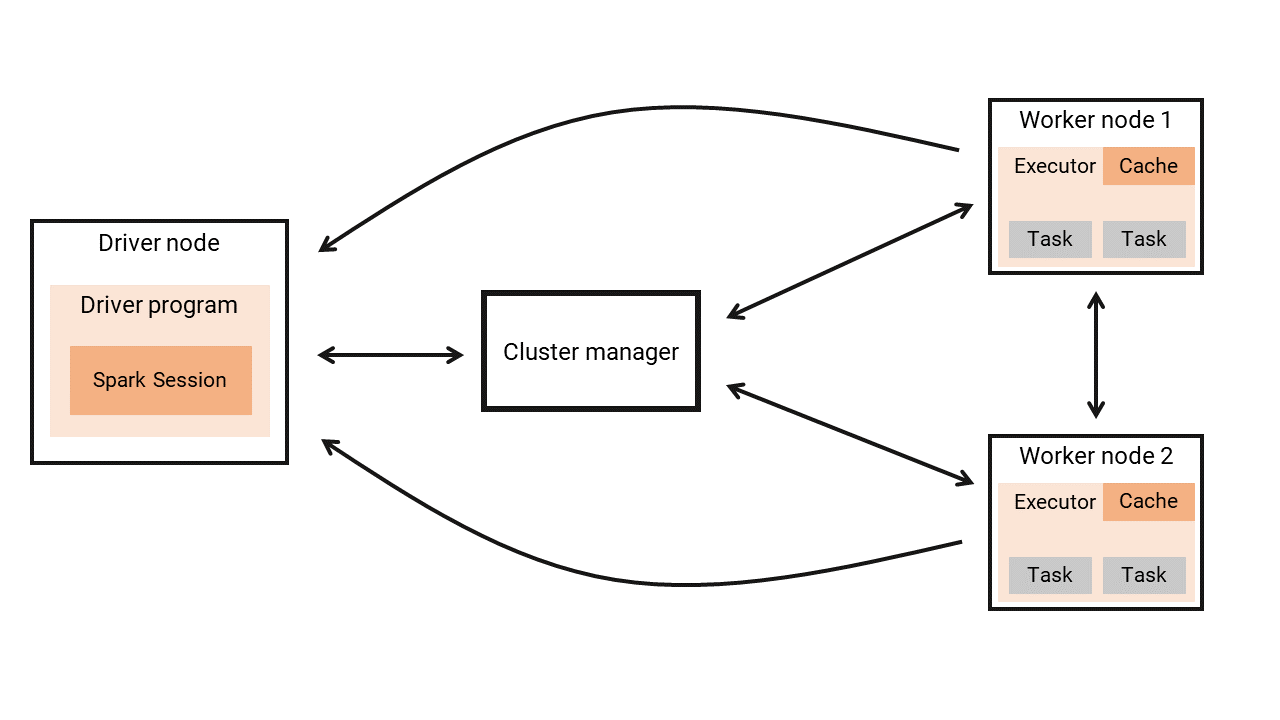

Spark Application Structure on a Cluster of Computers

- Driver Process

- Communicates with the cluster manager to acquire worker nodes.

- Once resources are allocated, breaks the application into smaller tasks.

- Cluster Manager

- Decides if Spark can use cluster resources (machines/nodes).

- Allocates necessary nodes to Spark applications.

- Worker Nodes

- Execute tasks assigned by the driver program.

- Send results back to the driver after execution.

- Can communicate with each other if needed during task execution.

Spark vs. Hadoop

Hadoop MapReduce: The Challenge

- Sequential Multi-Step Process:

- Reads data from the cluster.

- Processes data.

- Writes results back to HDFS.

- Disk Input/Output Latency:

- Each step requires disk read/write.

- Results in slower performance due to latency.

Apache Spark: The Solution

- In-Memory Processing:

- Loads data into memory once.

- Performs operations in memory wherever possible, spilling to disk only when the data does not fit.

- Data Reuse:

- Caches data for reuse in multiple operations (ideal for iterative tasks like machine learning).

- Faster Execution:

- Eliminates multiple disk I/O steps.

- Dramatically reduces latency for interactive analytics and real-time processing.

Apache Hadoop

- Framework Components:

- HDFS: Distributed storage system.

- MapReduce: Programming model for parallel processing.

- Ecosystem:

- Typically integrates multiple execution engines (e.g., Spark) within a single deployment.

Spark

- Focus Areas:

- Interactive queries, machine learning, and real-time analytics.

- Storage Agnostic:

- Does not have its own storage system.

- Operates on data stored in external systems such as HDFS.

- Integration:

- Can run alongside Hadoop.

Complementary Use

Many organizations store massive datasets in HDFS and utilize Spark for fast, interactive data processing.

- Spark can read data directly from HDFS, enabling seamless integration between storage and computation.

Hadoop provides robust storage and processing capabilities, and Spark brings speed and versatility to data analytics. Together they form a powerful combination for solving complex business challenges.

Use Case Example: An e-commerce company might store historical sales data in HDFS while using Spark to analyze customer behavior in real time to recommend products or detect fraudulent transactions.

Medscape: Real-Time Medical News for Healthcare Professionals

- A medical news app for smartphones and tablets designed to keep healthcare professionals informed.

- Provides up-to-date medical news and expert perspectives.

- Real-Time Updates:

- Uses Apache Storm/Spark to process about 500 million tweets per day.

- Automatic Twitter feed integration helps users track important medical trends shared by physicians and medical commentators.

PySpark on Google Colab

PySpark = Spark + Python

- `pyspark` is a Python API to Apache Spark.

- API: application programming interface, the set of functions, classes, and variables provided for you to interact with.

- Spark itself is coded in a different programming language, called Scala.

- We can combine Python, pandas, and PySpark in one program.

- Koalas (now called `pyspark.pandas`) provides a pandas-like porcelain on top of PySpark.

Google Drive on Google Colab

- To mount your Google Drive on Google Colab (after `from google.colab import drive`): `drive.mount('/content/drive')`

- To initiate uploading a file from your computer to the Colab session (after `from google.colab import files`): `files.upload()`

- To find the pathname of a CSV file in Google Drive:

- Click 📁 in the sidebar menu.

- Open drive ➡️ MyDrive …

- Hover the mouse cursor over the CSV file.

- Click the vertical dots.

- Click “Copy path”.

Spark DataFrame vs. Pandas DataFrame

- What makes a Spark DataFrame different from other DataFrames?

- Spark DataFrames are designed for big data and distributed computing.

- Spark DataFrame:

- Data is distributed across a cluster of machines.

- Operations are executed in parallel on multiple nodes.

- Can process datasets that exceed the memory of a single machine.

- Other DataFrames (e.g., Pandas):

- Operate on a single machine.

- Entire dataset must fit into memory.

- Limited by local system resources.

Lazy Evaluation and Optimization

- Spark DataFrame:

- Uses lazy evaluation: transformations are not computed until an action is called.

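- Because the full chain of transformations is known before anything runs, Spark can optimize the query plan before executing it.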

- Other DataFrames:

- Operations are evaluated eagerly (immediately).

- No built-in query optimization across multiple operations.
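
Python generators give a rough single-machine analogy for lazy evaluation. The sketch below is not Spark's API, and `read_rows` and `log` are hypothetical names. It only shows the pattern: defining the "transformations" runs nothing, and work starts when an "action" asks for results.

```python
log = []  # records when rows are actually read


def read_rows():
    """Pretend data source: logs each row at the moment it is read."""
    for n in range(1, 6):
        log.append(f"read {n}")
        yield n


# "Transformations": building the pipeline does not read any data yet.
evens = (n for n in read_rows() if n % 2 == 0)
doubled = (n * 2 for n in evens)
steps_before_action = len(log)
print(steps_before_action)  # 0: nothing has been computed so far

# "Action": asking for the results triggers the whole pipeline at once.
result = list(doubled)
print(result)    # [4, 8]
print(len(log))  # 5: every row was read only when the action ran
```

An eager library would instead read and filter the rows as soon as each line executes, producing an intermediate result at every step.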

Scalability

- Spark DataFrame:

- Designed to scale to petabytes of data.

- Utilizes distributed storage and computing resources.

- Ideal for large-scale data processing and analytics.

- Other DataFrames:

- Best suited for small to medium datasets.

- Limited by the hardware of a single computer.

Fault Tolerance

- Spark DataFrame:

- Built on Resilient Distributed Datasets (RDDs).

- Automatically recovers lost data if a node fails.

- Ensures high reliability in distributed environments.

- Other DataFrames:

- Typically lack built-in fault tolerance.

- Failures on a single machine can result in data loss.

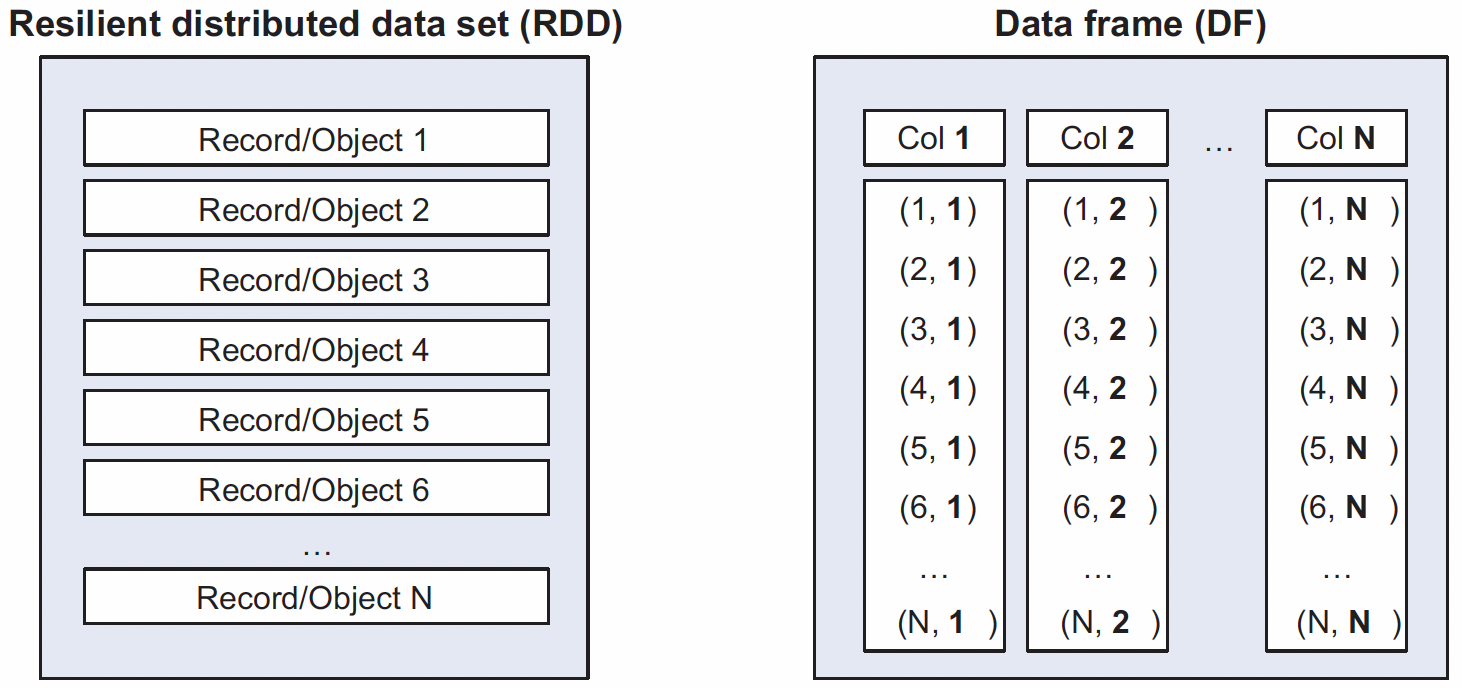

RDD and PySpark DataFrame

- In the RDD, we think of each row as an independent entity.

- With the `DataFrame`, we mostly interact with columns, performing functions on them.

- We still can access the rows of a `DataFrame` via RDD if necessary.